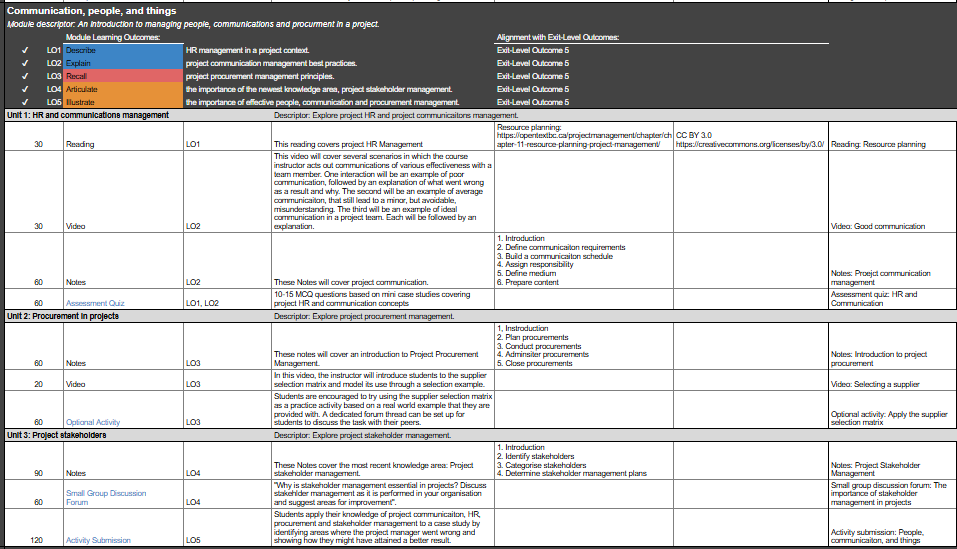

In two previous exercises I referenced a rough draft of a learning design plan (LDP) that I created for an introductory course on project management (view this LDP here). As this was created as an exercise to demonstrate underlying principles and skills only, the actual content of the course leaves much to be desired, and there is plenty of room for improvement in the initial design.

This got me thinking about the E of the ADDIE model: Evaluation.

Evaluation should occur on an ongoing basis. During Design, for example, quality assurance should have taken place to ensure that the LDP was logical, comprehensive, and representative of a well-structured, high-quality course. After the course has been delivered to students, a formal evaluation should take place to identify any areas for improvement and any elements that didn’t work.

In my line of work, I am used to performing in-depth annual evaluations of all existing courses. Although part of this involves picking up on and correcting errors or bad practice, these evaluations are also largely focused on identifying opportunities for innovation and improvement.

So, how would this course hold up in a formal evaluation?

Alignment and level of exit-level and module-level outcomes

As demonstrated previously, at face value the module-level outcomes align perfectly with the exit-level outcomes (in terms of Bloom’s Revised Taxonomy): no module is pitched, overall, at a higher or lower level than the exit-level outcome with which it is associated. A mismatch in level is one of the primary problem areas to look for when evaluating a course.
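Because this first check only compares levels, it is mechanical enough to sketch in code. The Python below is a minimal illustration that assumes each outcome has been tagged with a level from Bloom’s Revised Taxonomy. Only Module 4’s Apply-level alignment with exit-level outcome 4 comes from the LDP. The individual module-level outcome levels and Module 3 are hypothetical. Passing this check shows only that the levels match, not that the outcomes say the same thing.

```python
# Bloom's Revised Taxonomy levels, from lowest to highest.
BLOOM_LEVELS = ["Remember", "Understand", "Apply", "Analyse", "Evaluate", "Create"]


def level_rank(level):
    """Return the position of a Bloom level (0 = Remember, 5 = Create)."""
    return BLOOM_LEVELS.index(level)


def check_alignment(exit_outcomes, modules):
    """Compare each module's highest outcome level with its exit-level outcome.

    exit_outcomes: exit-level outcome number -> Bloom level
    modules: module name -> (exit-level outcome number, module-level outcome levels)
    Returns module name -> "aligned", "too low" or "too high".
    """
    results = {}
    for name, (exit_no, levels) in modules.items():
        highest = max(level_rank(level) for level in levels)
        target = level_rank(exit_outcomes[exit_no])
        if highest == target:
            results[name] = "aligned"
        elif highest < target:
            results[name] = "too low"
        else:
            results[name] = "too high"
    return results


# Hypothetical data, except that Module 4 maps to exit-level outcome 4 at Apply level.
exit_outcomes = {3: "Analyse", 4: "Apply"}
modules = {
    "Module 3": (3, ["Understand", "Analyse"]),
    "Module 4": (4, ["Remember", "Understand", "Apply"]),
}

for module, verdict in check_alignment(exit_outcomes, modules).items():
    print(f"{module}: {verdict}")
```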

The exit-level outcomes formulated for the course: Analysis

Distribution of Modules in terms of exit-level outcome and highest module-level outcome covered: Analysis

Of course, this assumes that the initial Design of the course was sound. In reality, this LDP would have been assessed and QA’d by a senior instructional designer, as well as by the subject-matter expert, and perhaps even a faculty member or representative from the client/partner institution. However, even when the most rigorous design and development processes are followed, course evaluators always need to be on the lookout for potential enhancements.

When looking at the course in more detail, however, this alignment may turn out to be only superficial: we’ve essentially determined that the colours match up, but what about the actual substance of the outcomes and their associated activities?

Take Module 4 as an example. Module 4 is indicated as being aligned with exit-level outcome 4, as shown here:

(Orange in this template indicates the Apply level of Bloom’s Taxonomy).

Let’s take a look at this in more detail: the exit-level outcome clearly references the importance of effective risk management in a project, while the module-level outcomes it supposedly aligns with cover only the step-by-step mechanics of managing risk in a project. While the content itself might imply the importance of effective risk management (and we’ll see later that it doesn’t), the outcomes themselves do not align.

What would the recommendation be for dealing with this? I would suggest that the subject-matter expert and an instructional design expert (because SMEs don’t always know how to make good design decisions) take a close look at this exit-level outcome (as well as any others that are problematic) and decide whether it does, indeed, reflect the vision for the course, i.e., is this really something that students need to be able to do? If the exit-level outcome is judged essential in its current form, then Module 4 will need to be reworked thoroughly. If the skill of using the step-by-step risk management process is judged more important, then the exit-level outcome itself would simply have to be reworded (perhaps to something like “Apply the basic steps in the project risk management process”).

Measurable verbs

It is essential that the verbs used in learning outcomes are measurable. “Discover” and “Understand” are examples of verbs that are not measurable. How do you test whether or not someone has discovered something? How do you know whether a student has understood a concept, as opposed to simply regurgitating the correct set of facts?

As this example is based on a template, each of the verbs used comes from a predefined list carefully selected for measurability. This course would get a tick against this criterion, but the deeper problems with its learning outcomes would still need to be addressed.
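Where a template supplies an approved verb list, checking outcomes against it is a simple lookup. The following Python sketch assumes that each outcome begins with its verb. The verb list is hypothetical and stands in for the template’s own list, which isn’t reproduced here.

```python
# Hypothetical approved list standing in for the template's own verb list.
MEASURABLE_VERBS = {
    "define", "list", "identify", "describe", "explain", "apply",
    "calculate", "compare", "differentiate", "evaluate", "justify", "compile",
}


def flag_unmeasurable(outcomes):
    """Return the outcomes whose leading verb is not on the approved list."""
    flagged = []
    for outcome in outcomes:
        words = outcome.split()
        # Assumes the outcome starts with its verb; strip stray punctuation.
        verb = words[0].strip(",.;:").lower() if words else ""
        if verb not in MEASURABLE_VERBS:
            flagged.append(outcome)
    return flagged


# "Apply the basic steps..." is the rewording suggested for exit-level outcome 4;
# the other two outcomes are hypothetical.
outcomes = [
    "Describe the phases of the project life cycle",
    "Understand the role of the project manager",
    "Apply the basic steps in the project risk management process",
]
print(flag_unmeasurable(outcomes))
```

Only the leading word is checked. An outcome phrased as “Be able to describe…” would therefore be flagged for rewording, which is arguably no bad thing.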

Alignment of components with module-level outcomes

It is essential that the verbs used in a learning outcome are actually achievable through the components against which that outcome is aligned. This is less important for supportive materials such as notes or videos: these are didactic and passive in nature, and students are simply required to absorb the information in preparation for completing an assessment of some kind. It is, however, absolutely essential that learning outcomes align directly with assessments, as this is the point at which students demonstrate the desired skill set.

You cannot “Discuss” in a quiz or “Assemble an engine” in an essay. The verbs you choose are important in determining how you will test your students.

Let’s take a look at Module 5 as an example of this:

Unit 1 covers learning outcomes 1 and 2 for this module. The Reading, Video and Notes could all be said to align with these two outcomes, as they are supportive materials. The problem is the assessment quiz. In a multiple-choice format, how is a student supposed to “Describe” or “Explain” anything? They have no agency or ability to input any information beyond the options they are presented with.
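The same reasoning can be expressed as a lookup from each assessment format to the verbs it can provide evidence for. The following Python is a minimal sketch. The mapping in FORMAT_SUPPORTS is a hypothetical starting point that a design team would need to agree on, not a standard.

```python
# Hypothetical mapping: which outcome verbs each assessment format can evidence.
# A multiple-choice quiz only lets students pick from given options, so here it
# is limited to recognition-type verbs.
FORMAT_SUPPORTS = {
    "multiple-choice quiz": {"identify", "recognise", "select", "classify"},
    "essay": {"describe", "explain", "discuss", "compare", "justify", "evaluate"},
    "discussion forum": {"discuss", "explain", "compare", "justify"},
    "practical task": {"apply", "calculate", "assemble", "compile", "design"},
}


def unsupported_verbs(assessment_format, outcome_verbs):
    """Return the outcome verbs that the given assessment format cannot evidence."""
    supported = FORMAT_SUPPORTS.get(assessment_format, set())
    return [verb for verb in outcome_verbs if verb.lower() not in supported]


# Module 5, Unit 1: outcomes 1 and 2 use "Describe" and "Explain"; the assessment is a quiz.
print(unsupported_verbs("multiple-choice quiz", ["Describe", "Explain"]))
# prints ['Describe', 'Explain']
```

An essay would pass for “Describe” and “Explain” under this mapping, but not for “Assemble”. This echoes the point that you cannot assemble an engine in an essay.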

Here I would recommend that the learning outcomes be reviewed, and a decision made about whether the two skills mentioned are, in fact, the most important skills students need to demonstrate in that unit. If not, the learning outcomes need to change to reflect the real desired outcomes. If, however, they do reflect the desired outcomes of the unit, then the assessment needs to be replaced in its entirety.

Although the LDP serves as a fantastic roadmap when evaluating a course, it is important to assess the actual content covered in each module as well. Returning to the example of Module 4 discussed previously, you can see that the content mirrors the module-level outcomes perfectly, but that it does not reach that all-important exit-level outcome at all.

In a real course, the actual content for each of the above components would also have been analysed to ensure that the information specified in design reflects what is actually presented to students on the LMS.

Speaking of the LMS, consider the very rough content that has been added for Module 1 using the LearnPress plug-in. If this were the “real” content (and not just a placeholder used to demonstrate the concept of a learning path on an LMS), the instructional designer would throw out the entire module due to poor quality, misalignment between the learning outcomes and the content actually provided to students, and lack of originality.

Estimated learning times

The estimated learning times for each component should also be assessed during evaluation, particularly if these are communicated to students (such as in the Module 1 example). If a video is 6 minutes long, students should not be told that it will take them 20 minutes. Likewise, the Unit 2 Notes are shorter than the Unit 1 Notes, but they’ve been assigned 50% more learning time.
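Checks like these can be roughed out from component lengths. The following Python sketch compares each stated time with a crude estimate made up of the video running time plus the reading time. It flags any stated time that is more than twice, or less than half, that estimate. The reading speed, the tolerance and the word counts are all assumptions chosen for illustration. Only the 6-minute video advertised as 20 minutes, and the 50% extra time given to the shorter Unit 2 Notes, come from the text.

```python
from dataclasses import dataclass

# Assumption: students read study notes at roughly 200 words per minute.
WORDS_PER_MINUTE = 200
# Assumption: flag stated times more than double, or less than half, the estimate.
TOLERANCE = 2.0


@dataclass
class Component:
    name: str
    stated_minutes: float
    video_minutes: float = 0.0  # running time, for videos
    word_count: int = 0         # length, for notes and readings

    def expected_minutes(self):
        """Crude estimate: video running time plus reading time."""
        return self.video_minutes + self.word_count / WORDS_PER_MINUTE


def flag_learning_times(components):
    """Return (name, stated, estimate) for components outside the tolerance."""
    flagged = []
    for c in components:
        expected = c.expected_minutes()
        if expected == 0:
            continue  # nothing to estimate from
        ratio = c.stated_minutes / expected
        if ratio > TOLERANCE or ratio < 1 / TOLERANCE:
            flagged.append((c.name, c.stated_minutes, round(expected, 1)))
    return flagged


# Hypothetical word counts; Unit 2 Notes are shorter but get 50% more time.
components = [
    Component("Unit 1 Video", stated_minutes=20, video_minutes=6),
    Component("Unit 1 Notes", stated_minutes=10, word_count=1500),
    Component("Unit 2 Notes", stated_minutes=15, word_count=1200),
]
for name, stated, expected in flag_learning_times(components):
    print(f"{name}: students told {stated} min, estimate about {expected} min")
```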

This needs to be evaluated and corrected because of the need for effective expectation management (which applies to offline as well as online courses). Students will plan their time around how long we tell them a component will take, so these estimates need to be accurate to avoid frustration.

Quality of content

The quality of the content needs to be assessed. One aspect of this is ensuring that all information is still up to date. For example, in a course on using social media to market your business, you will find that information on or references to Facebook will require multiple updates every year (a factor that would need to be planned into your overall evaluation schedule). The more fast-paced the industry, the more often, and more extensively, you will have to update the content.

In terms of the example used here, the core project management body of knowledge is likely to remain fairly stable over time, while aspects that relate to people management may need to be updated as management and leadership norms evolve.

Quality also covers the content’s correctness, comprehensiveness, and ability to add value. If an additional video is included, what does it add to the educational experience? At the most basic level, is all of the information included correct, and does it represent the complete set of knowledge needed to fully appreciate and understand a required section of work? Is it well written? Are the sources used reputable (the Wikipedia content used in the placeholder example for Module 1 would fail on this point)?

Quality of engagement on discussion forums

Most LMSs can generate participation reports showing how students engaged on the discussion forums. If discussion prompts are planned in advance and deliberately added to the learning path, they need to be assessed for efficacy. If interaction on a post is extremely low, what are the reasons for this? Is it a bad, boring or stupid question? Do students have too much else to do in that module to focus on a discussion prompt? Have discussions been adequately positioned as essential value-adds (and do they add value)? If a post has really high interaction, why might that be? If the reasons behind this are pedagogically valid, should we try to mirror the style or wording of the discussion prompt in other modules? If the reason for high interaction is more social than educational, what is the value of this? Is it a distraction, or a much-needed mental break?
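A participation report won’t answer these questions, but it can show which prompts to ask them about. The following Python is a minimal sketch. It assumes the report can be reduced to a count of posts and replies per prompt. The counts and the 0.5× and 2× median thresholds are hypothetical.

```python
from statistics import median

# Hypothetical participation report: discussion prompt -> posts plus replies.
participation = {
    "Module 1 prompt": 42,
    "Module 2 prompt": 38,
    "Module 3 prompt": 5,
    "Module 4 prompt": 40,
    "Module 5 prompt": 110,
}


def classify_prompts(counts, low_factor=0.5, high_factor=2.0):
    """Label prompts whose interaction is far below or above the median.

    low_factor and high_factor are arbitrary thresholds for illustration.
    """
    mid = median(counts.values())
    labels = {}
    for prompt, count in counts.items():
        if count < low_factor * mid:
            labels[prompt] = "low: review the prompt"
        elif count > high_factor * mid:
            labels[prompt] = "high: find out why"
        else:
            labels[prompt] = "typical"
    return labels


for prompt, label in classify_prompts(participation).items():
    print(f"{prompt}: {label}")
```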

Based on the answers to the above questions, discussion prompts would then be updated.

Average grades and quality of assignment instructions and content

The average grades for all assignments and quizzes should be collated in one easy-to-understand graph where it is immediately obvious which assessments are problematic. Here, you are looking for outliers. For example, if all of the assessments have an average grade around the 65% mark, but one assessment is at 24%, what is the reason for that? It could be that the assessment is too difficult for students at that level. It could be that the assessment instructions do not properly prepare students for what they are expected to do on the assignment. Or it could be that their workload in that particular module or week is too high for them to spend the time needed on that assessment. Whatever the reason, that assessment needs to be reviewed in detail. Note that it’s not bad practice to have one extra-challenging assignment; you simply have to ensure that the assignment is not asking for more than students are capable of.
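Before drawing the graph, the outliers can also be flagged numerically. The following Python sketch compares each assessment’s average with the median across all assessments. The median is used so that a single extreme result doesn’t drag the baseline with it. The sketch then prints a rough text bar chart. The assessment names, the averages and the 20-point threshold are hypothetical.

```python
from statistics import median

# Hypothetical average grades (%) per assessment; one sits far below the rest.
averages = {
    "Quiz 1": 66,
    "Quiz 2": 63,
    "Assignment 1": 24,
    "Quiz 3": 67,
    "Assignment 2": 64,
    "Final assessment": 65,
}

# Assumption: more than 20 percentage points from the median counts as an outlier.
THRESHOLD = 20


def find_outliers(avgs, threshold=THRESHOLD):
    """Return assessments whose average is more than `threshold` points from the median."""
    mid = median(avgs.values())
    return {name: avg for name, avg in avgs.items() if abs(avg - mid) > threshold}


def text_chart(avgs):
    """Print a rough bar chart (one '#' per 5%) so outliers stand out at a glance."""
    for name, avg in avgs.items():
        print(f"{name:<18}{'#' * (int(avg) // 5):<21}{avg}%")


text_chart(averages)
print("Outliers:", find_outliers(averages))
```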

If all marks are too low, the positioning or difficulty of the entire course may need to be reassessed. If the course is pitched as an introductory course (as in our example), students cannot be asked to perform tasks more suited to experienced project managers. For example, perhaps the final assessment, where students actually compile a project plan, is asking too much for this level of course. This doesn’t mean that students can’t work on a project plan at some level, but they would need a great deal of additional support. Rather than creating a plan from scratch, the assessment could, for example, involve filling in missing information in a plan that we provide.

If all the marks are extremely high, this could be a sign that the course is too easy, and therefore worthless. How worrying this is depends on what kind of course it is and how it is offered. A university course, for example, should have an average of around 65% (by South African standards). It should be difficult for students to reach the 75% mark at university level, and anything higher than 80% is considered excellent. On the other hand, if you’re designing a free and open course aimed at addressing the fundamentals of a simple topic, a high assessment average might be more desirable.

Technological difficulties

Finally, I would review the course to identify any technological difficulties that arose, and look for ways to make technological improvements that enhance the learning experience for students. As this “course” has not actually been implemented (the I of ADDIE), I am unable to assess this area as part of this exercise.

The final verdict: if you came across my website while looking for lesson plans for project management, think twice before using the rough example presented here.